In the world of console gaming, players frequently argue over what matters more: frames per second (FPS) or graphical fidelity. Modern consoles like the PlayStation 5, Xbox Series X, and the upcoming Switch 2 now give players a choice. One mode prioritizes fidelity, running the game at a stable 30fps with maximum detail. The other mode prioritizes performance, hitting 60fps by sacrificing some visual features. Most people can instantly feel the difference between the two modes, but what about even higher frame rates? At what point does our brain stop noticing the increase?

Understanding Frames Per Second (FPS)

For those unfamiliar, FPS measures how many images your display shows each second. Digital video simply shows a series of still pictures so quickly that they appear to be in motion.

- Hollywood films: These use a standard 24fps to give movies a cinematic look with natural motion blur.

- Sports broadcasts: Broadcasters typically show sports at 60fps. This allows the viewer to feel like they are watching a live game.

TV and film benefit from pre-recorded footage; because the content already exists, the playback device simply switches images fast enough to match the media’s frame rate. Video games work differently. Since players move and look around at will, the console’s hardware must render every individual frame on the fly.
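Because every frame has to be rendered on the fly, a target frame rate works out to a fixed time budget per frame. The arithmetic can be sketched as follows; the function name `frame_budget_ms` is purely illustrative and not taken from any console SDK or game engine.

```python
def frame_budget_ms(fps: float) -> float:
    """Return the time available to produce one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


# Frame rates mentioned in this article, plus 120fps as an extra data point.
for target in (24, 30, 60, 120):
    print(f"{target:>3} fps -> {frame_budget_ms(target):.2f} ms per frame")
```

At 30fps the hardware has roughly 33 ms to finish each frame, while 60fps cuts that to under 17 ms, which is why performance modes usually give up some visual features.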

The Evolution of Console and PC Standards

While the option is relatively new to consoles, PC gamers have long been able to adjust graphics settings, a result of the wide variety of available hardware. From the PS3/Xbox 360 era through the early PS4 years, consoles mostly targeted a steady 30fps. This changed with the PlayStation 4 Pro, the first console capable of native 4K resolution. However, the system used most of its improved hardware just to reach that resolution, often at a cost to performance. Furthermore, the benefit of native 4K wasn’t always apparent to gamers, especially those without 4K displays. Consequently, developers began offering a choice between frame rate and graphics, and the feature carried over into the current generation of hardware.

PC gamers have navigated these waters for years. Even during the Nintendo 64 era, when console games often operated at an abysmal 15fps, PC ports could achieve 60fps. Historically, the monitor’s refresh rate was the only limit. If the frame rate didn’t divide evenly into the refresh rate, the display could end up showing parts of two different frames at once. We know this phenomenon as “screen tearing.” This led to an arms race that lasted until manufacturers introduced Variable Refresh Rate (VRR) in the mid-2010s. This technology allowed displays to sync with a game’s frame rate, usually between 30Hz and 144Hz, which in turn let competitive gamers chase increasingly higher frame rates.
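The two conditions described above can be expressed as quick checks. This is a deliberately simplified sketch: it follows the article's rule of thumb about even division rather than modeling real display timing, and the helper names `divides_evenly` and `within_vrr_range` are hypothetical. The 30Hz to 144Hz default is the typical range quoted above; actual displays vary.

```python
def divides_evenly(fps: int, refresh_hz: int) -> bool:
    """True when each frame can be held for a whole number of refreshes."""
    return fps > 0 and refresh_hz % fps == 0


def within_vrr_range(fps: float, low_hz: float = 30.0, high_hz: float = 144.0) -> bool:
    """True when a VRR display could match its refresh rate to this frame rate."""
    return low_hz <= fps <= high_hz


# Compare a fixed 60Hz display against a VRR display with the assumed range.
for fps in (30, 45, 60, 100):
    print(fps, divides_evenly(fps, 60), within_vrr_range(fps))
```

On a fixed 60Hz display, 30fps and 60fps pass the first check while 45fps and 100fps do not; with VRR, all four fall inside the supported window.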

The Science of Perception

It’s easy to think a higher number is always better, especially with the yearly release of increasingly powerful GPUs. As influencers demonstrated “crazy high” frame rates on expensive hardware, 60fps suddenly seemed insufficient. However, as we crossed the 100fps threshold, many gamers reached the same conclusion: it is difficult to notice improvements past a certain level. That “limit” also seems to vary from person to person.

Fighter Pilots vs. Pro Gamers

Researchers conducted experiments with air combat pilots. At the time, experts considered these pilots to have the best response times on the planet. The researchers showed the pilots sets of video clips that appeared to be still images, with a single frame altered in one clip of each set. The pilots were asked to identify which image had changed.

- Average Pilot: Identified a change at 1/180th of a second.

- Top Performer: Identified a change at 1/250th of a second.

Surprisingly, pro gamers smashed these records. In a similar study with professional Counter-Strike players, the highest performer repeatedly detected an image change in 1/500th of a second. The average for the group sat around 1/320th of a second.

Why Eyesight is Tricky

Human eyes and brains process movement differently depending on where it occurs in our field of vision:

- Peripheral Vision: Our ancestors used the sides of their eyes to detect predators. As a result, our peripheral vision can detect movement at around 200fps.

- Direct Vision: Because we weren’t primarily hunters, our forward-facing vision is slower. It can detect changes at rates as low as 20fps.

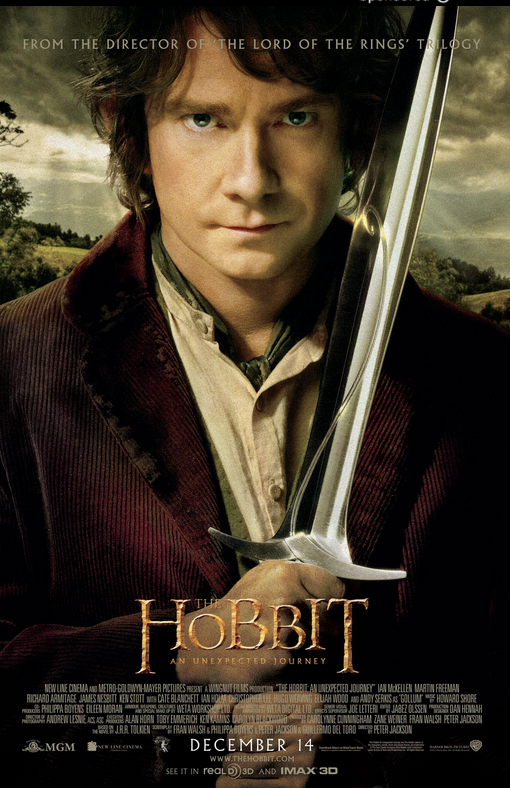

The “Uncanny” Feeling and VR Sickness

When movement feels “unnatural,” our brains often reject it. Many viewers found the high frame rate in Peter Jackson’s The Hobbit “off” or “sped up.” This happened even though the film’s 48fps was still below the 60fps of a standard football broadcast. Years of viewing have conditioned our brains to expect specific frame rates for different types of media.

This gets even more complicated with Virtual Reality. In the early 90s, Sega attempted to build a VR headset for the Genesis, but testers suffered from severe motion sickness. We now know that for VR, our brains need to view images in the 90fps to 200fps range to believe they are real. Anything lower causes the brain to reject the image. This triggers vertigo and nausea—now known as VR Sickness.

Is Higher FPS Really the Future?

For most gamers, going higher provides no significant benefit. Competitive players will still strive for high numbers, and they do benefit the most. However, the advantage above 144fps is negligible. You might notice a change in the image, but your body cannot physically react to it in time. For everyone else, the benefits of high-refresh-rate screens (now seen on many smartphones) remain largely aesthetic. Your eyes, brain, and hands are perfectly content working within the 60–100fps range.
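One way to see why the gains shrink is to look at how much time each jump in frame rate actually removes from a single frame. The sketch below is only arithmetic; `ms_saved` is an illustrative name, and 240fps is an assumed example of a rate above 144fps rather than a figure from the studies mentioned earlier.

```python
def ms_saved(low_fps: float, high_fps: float) -> float:
    """Milliseconds removed from each frame when moving from low_fps to high_fps."""
    return 1000.0 / low_fps - 1000.0 / high_fps


# Each step up saves less time per frame than the one before it.
for low, high in [(30, 60), (60, 144), (144, 240)]:
    print(f"{low:>3} -> {high:>3} fps saves {ms_saved(low, high):.2f} ms per frame")
```

Moving from 30fps to 60fps removes about 16.67 ms from every frame, while moving from 144fps to 240fps removes under 3 ms.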